In the world of social media, relying on a gut feeling is a recipe for mediocrity. You might think your audience prefers videos, or that your call-to-action is compelling, but without data, it’s all just a guess.

This is where A/B testing comes in. A/B testing, or split testing, is a scientific method for comparing two versions of a social media post to see which one performs better.

It’s one of the most reliable ways to replace guesswork with evidence and build a data-driven strategy that improves your engagement, reach, and ultimately, your ROI.

A/B Testing is a Marketer’s Secret Weapon:

A/B testing transforms your social media strategy from a creative gamble into a precise, optimized machine.

1. Stop Guessing, Start Knowing:

No more debating whether a carousel post will outperform a single image. A/B testing gives you a clear, data-backed winner. It provides concrete evidence of what your audience actually responds to, allowing you to double down on what works and stop wasting time on what doesn’t.

2. Optimize for Maximum Engagement:

Engagement (likes, comments, shares, and saves) is the currency of social media. A/B testing helps you find the combination of copy, visuals, and hashtags that makes your content stand out and be rewarded by platform algorithms.

3. Uncover Deep Audience Insights:

Each A/B test is a window into your audience’s psychology. Over time, you’ll build a clear profile of their preferences, learning whether they respond better to questions or statements, witty humor or professional authority, bright colors or muted tones.

A 5-Step Guide to Social Media A/B Testing:

Follow this simple, repeatable process to start running effective tests and watching your engagement grow.

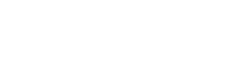

Step 1: Define Your Goal and Hypothesis

Before you do anything, decide what you want to measure. Is the goal to increase likes, comments, click-through rate (CTR), or watch time? Once your goal is clear, form a hypothesis—an educated guess about which version will win.

Example Hypothesis:

A post with a question-based headline will generate a higher engagement rate than a post with a statement-based headline.
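To make a hypothesis like this testable, it helps to pin down exactly how the metric is calculated before the test starts. The sketch below is one possible definition, not a platform standard: it assumes engagement rate means interactions divided by impressions (some teams divide by reach or followers instead), and the `PostStats` field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PostStats:
    """Raw counts for one post. Field names are illustrative assumptions."""
    impressions: int
    likes: int
    comments: int
    shares: int
    saves: int
    clicks: int


def engagement_rate(stats: PostStats) -> float:
    """Interactions divided by impressions (0.0 when there are no impressions)."""
    if stats.impressions == 0:
        return 0.0
    interactions = stats.likes + stats.comments + stats.shares + stats.saves
    return interactions / stats.impressions


def click_through_rate(stats: PostStats) -> float:
    """Clicks divided by impressions (0.0 when there are no impressions)."""
    if stats.impressions == 0:
        return 0.0
    return stats.clicks / stats.impressions


# Made-up numbers, purely for illustration.
example = PostStats(impressions=2000, likes=90, comments=12,
                    shares=10, saves=8, clicks=40)
print(f"Engagement rate: {engagement_rate(example):.2%}")  # Engagement rate: 6.00%
print(f"CTR: {click_through_rate(example):.2%}")           # CTR: 2.00%
```

Whichever definition you choose, use the same one for both versions so the comparison stays fair.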

Step 2: Choose Your Variable

The golden rule of A/B testing is to test only one variable at a time. If you change two things at once, you can’t tell which one caused the change in performance.

Common variables to test:

Headlines/Copy:

Question vs. statement, long vs. short captions, use of emojis.

Visuals:

Image vs. video, carousel vs. single photo, lifestyle photo vs. product shot.

Calls-to-Action (CTAs):

“Learn More” vs. “Shop Now,” text-based CTA vs. a button.

Posting Time & Day:

Morning vs. evening, weekday vs. weekend.

Hashtags:

Number of hashtags, placement, or a specific set of niche hashtags.

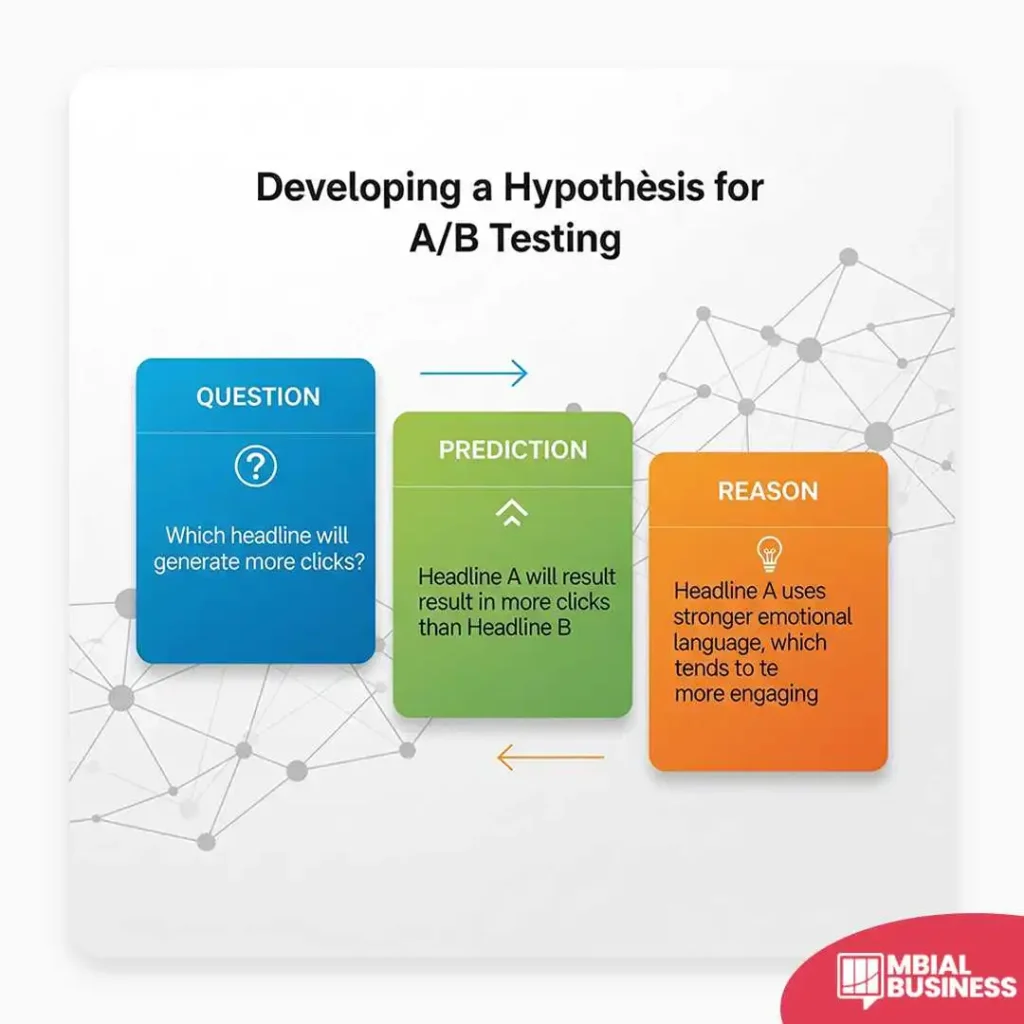

Step 3: Create Your Variations (A and B)

This is where you create two versions of your content that are identical except for the single variable you are testing.

Version A (Control):

A post with a statement-based headline.

Version B (Variant):

The exact same post, but with a question-based headline.

Important:

All other elements—the image, the CTA, the emojis—must remain the same.
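If you draft your posts in a spreadsheet or script, a quick check can catch accidental extra differences before you publish. The following is a minimal sketch that assumes each version is described as a dictionary; the keys (`headline`, `image`, `cta`, `hashtags`) and the sample copy are hypothetical.

```python
def differing_fields(control: dict, variant: dict) -> list[str]:
    """Return the names of the fields whose values differ between two versions."""
    keys = sorted(set(control) | set(variant))
    return [key for key in keys if control.get(key) != variant.get(key)]


# Version A (control): statement-based headline.
version_a = {
    "headline": "Our new planner keeps your week on track.",
    "image": "planner.png",
    "cta": "Learn More",
    "hashtags": ("#productivity", "#planning"),
}

# Version B (variant): identical, except for a question-based headline.
version_b = {**version_a, "headline": "Is your week as organized as it could be?"}

changed = differing_fields(version_a, version_b)
if len(changed) != 1:
    raise ValueError(f"Expected exactly one changed field, found: {changed}")
print(f"Testing variable: {changed[0]}")  # Testing variable: headline
```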

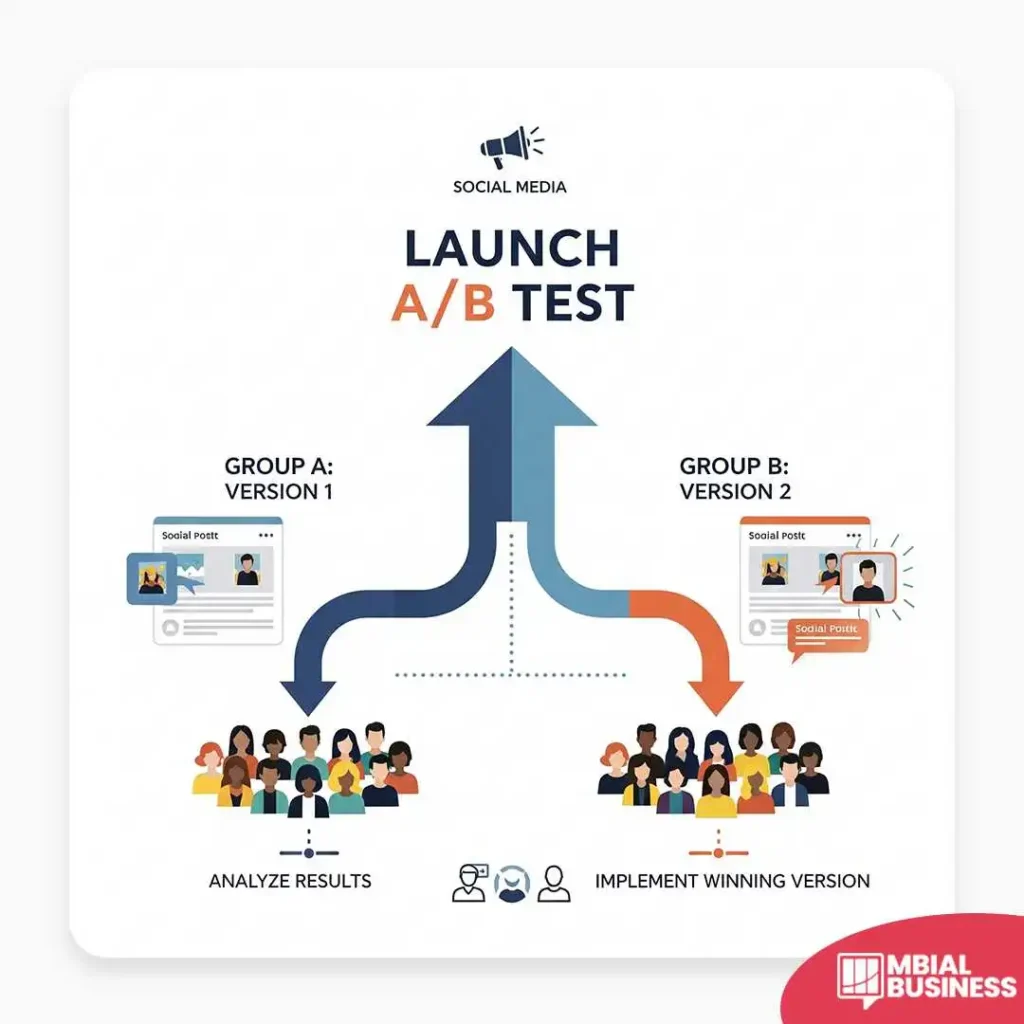

Step 4: Run the Test

The most reliable way to run an A/B test is through a platform’s native ad manager. Platforms like Meta Ads Manager, LinkedIn Campaign Manager, and TikTok Ads Manager have built-in features that split your audience and show each group one of your versions. For organic content, you can use features like Instagram’s “Trial Reels,” which tests up to four versions of a Reel on a non-follower audience.

Best Practices:

Audience Consistency:

Ensure each version is shown to a comparable, equally sized segment of the same target audience.

Sufficient Data:

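Let the test run long enough to collect a meaningful amount of data. As a rough rule of thumb, aim for at least 1,000 to 5,000 impressions per version; small differences between versions may need more before they reach statistical significance. Running the test for at least 7 days accounts for weekly engagement patterns.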
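How many impressions are "enough" depends on how big a difference you hope to detect. As a rough sketch, the snippet below uses the standard sample-size approximation for comparing two proportions. The function name, the 5% significance level, the 80% power, and the example rates are all assumptions chosen for illustration, not platform recommendations.

```python
import math
from statistics import NormalDist


def impressions_per_version(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate impressions needed per version to detect the given difference.

    Uses the normal-approximation formula for a two-sided test of two proportions.
    """
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to compute a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    n = (z_alpha + z_beta) ** 2 * variance / (baseline_rate - expected_rate) ** 2
    return math.ceil(n)


# Hypothetical scenario: a 4% engagement rate today, hoping the variant reaches 5%.
needed = impressions_per_version(baseline_rate=0.04, expected_rate=0.05)
print(f"Impressions needed per version: {needed}")
```

With these example inputs, the estimate comes out at several thousand impressions per version, and the smaller the difference you want to detect, the more impressions you need.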

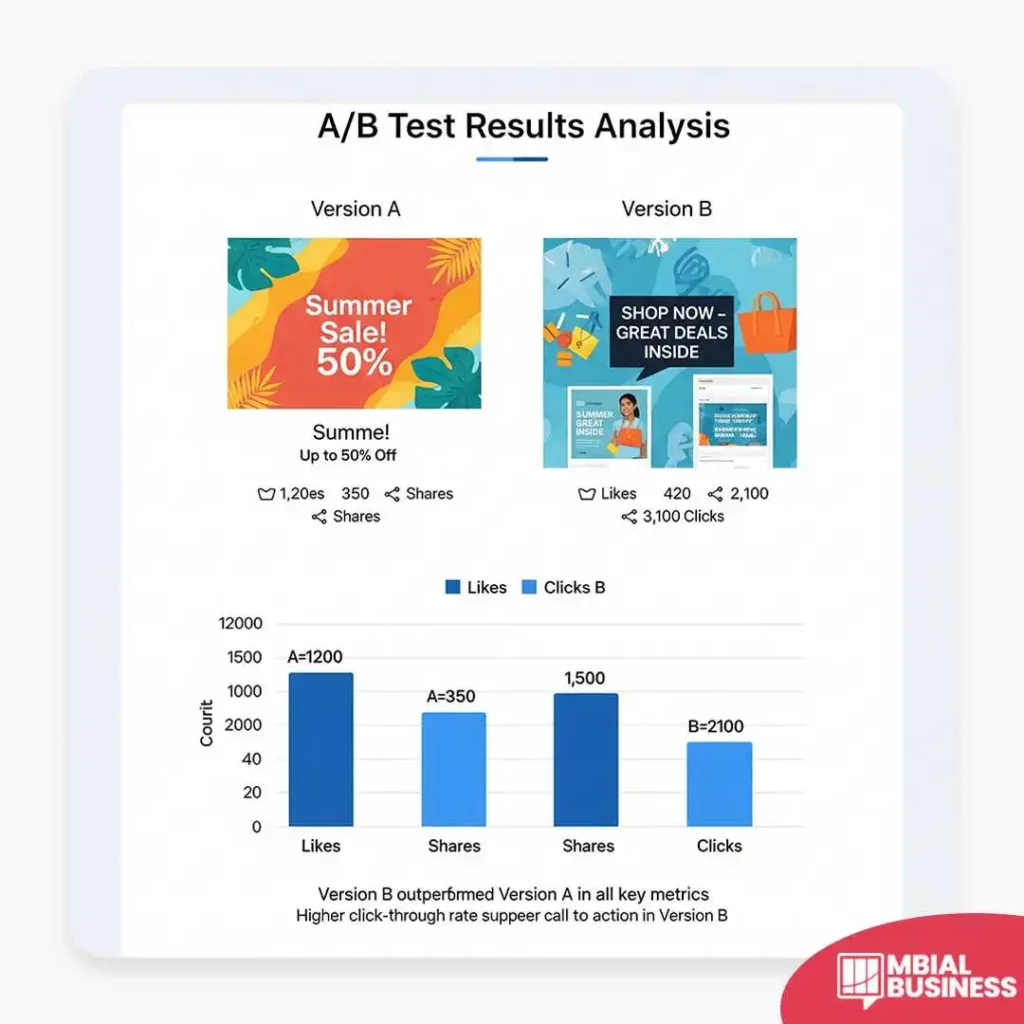

Step 5: Analyze the Results and Act

Once your test is complete, it’s time to find the winner. Look at your predefined metrics to see which variation outperformed the other.

If B Wins:

The question-based headline performed better. This is now your new best practice.

If A Wins:

The statement-based headline was the clear winner. Keep using statement-based headlines.

If It's a Tie:

The test was inconclusive, which is still a useful lesson. Either this variable doesn’t have a meaningful impact on engagement, or the test didn’t collect enough data to detect one. If your sample was reasonably large, move on to test a different element.
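To tell a real winner from random noise, you can run a simple significance check on the final numbers. The sketch below uses a two-proportion z-test, one common choice among several. The counts are made up, the function name is hypothetical, and the 0.05 threshold is a convention rather than a rule.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(engaged_a: int, impressions_a: int,
                          engaged_b: int, impressions_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in rates (B minus A)."""
    rate_a = engaged_a / impressions_a
    rate_b = engaged_b / impressions_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (engaged_a + engaged_b) / (impressions_a + impressions_b)
    std_error = math.sqrt(pooled * (1 - pooled)
                          * (1 / impressions_a + 1 / impressions_b))
    if std_error == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: Version A got 200 engagements and Version B got 260,
# each from 5,000 impressions.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)

if p_value >= 0.05:
    print(f"Inconclusive (p = {p_value:.3f}): treat it as a tie.")
elif z > 0:
    print(f"B wins (p = {p_value:.3f}).")
else:
    print(f"A wins (p = {p_value:.3f}).")
```

A p-value below 0.05 suggests the gap is unlikely to be chance alone; anything above it is best treated as a tie.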

The Bottom Line:

A/B testing is not just a tactic; it’s a mindset. It’s the commitment to a data-driven, continuous improvement strategy.

By consistently testing your assumptions, you will learn to speak your audience’s language, create content they genuinely want to engage with, and build a social media presence that is not just popular, but truly powerful.